For a long time, networking was defined by distributed protocols such as BGP, OSPF, MPLS and STP. Every network device in the topology ran these protocols, and collectively they made the internet work, accomplishing the remarkable job of connecting the plethora of devices that make up the internet. However, the effort required to configure, troubleshoot and maintain these devices was enormous, and on top of that came the cost of upgrading them every few years. Together, these costs compelled the networking industry to find a solution.

SDN emerged in the late 2000s. Its central idea, separating the network's brain (the control plane) from the devices that forward traffic (the data plane), was radical, and it spread quickly across the networking industry. SDN brought centralized control to the network, so the whole network can be managed from a single logical controller. This controller evaluates the entire topology and pushes instructions down to individual devices, making sure each device works as efficiently as possible. The SDN controller can also track resource utilization and respond to failures on its own, minimizing downtime.
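To make the centralized model concrete, here is a minimal sketch of a controller that holds the whole topology, computes a next-hop table for every switch with breadth-first search (a stand-in for whatever path computation a real controller uses), and recomputes and re-pushes every table when a link fails. `Controller`, `push_tables` and `link_down` are hypothetical names, and "pushing" is modeled as returning the tables rather than talking to real switches over a protocol such as OpenFlow.

```python
from collections import deque


class Controller:
    """Hypothetical centralized SDN controller: it holds the full topology
    and computes a next-hop table for every switch from that global view."""

    def __init__(self, links):
        self.adj = {}
        for a, b in links:
            self.adj.setdefault(a, set()).add(b)
            self.adj.setdefault(b, set()).add(a)
        self.tables = {}  # switch -> {destination: next_hop}

    def _next_hops_from(self, src):
        # Breadth-first search from src, remembering which neighbor of src
        # each destination was first reached through.
        first_hop = {}
        seen = {src}
        queue = deque()
        for n in sorted(self.adj[src]):
            seen.add(n)
            first_hop[n] = n
            queue.append(n)
        while queue:
            node = queue.popleft()
            for n in sorted(self.adj[node]):
                if n not in seen:
                    seen.add(n)
                    first_hop[n] = first_hop[node]
                    queue.append(n)
        return first_hop

    def push_tables(self):
        # Recompute every switch's table and "install" it (here: store it).
        self.tables = {sw: self._next_hops_from(sw) for sw in self.adj}
        return self.tables

    def link_down(self, a, b):
        # Respond to a failure centrally: update the topology, then re-push.
        self.adj[a].discard(b)
        self.adj[b].discard(a)
        return self.push_tables()


if __name__ == "__main__":
    ring = Controller([("s1", "s2"), ("s2", "s3"), ("s3", "s4"), ("s4", "s1")])
    print(ring.push_tables()["s1"]["s3"])          # prints s2
    print(ring.link_down("s1", "s2")["s1"]["s3"])  # prints s4
```

The point of the design is that no switch runs a routing protocol of its own: a single failure is handled by one recomputation over the global view.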

SDN simplified networking considerably. Storage, however, which complements networking, was at that time still implemented in the traditional way. Coho Data, based in Sunnyvale, California, set out to redefine storage using the concepts of software-defined networking, introducing a control-centric architecture to storage.

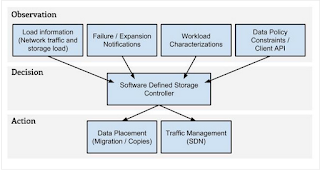

Here's a graphical representation of how the storage controller looks like:

SDSC (Software Defined Storage Controller) is the central decision-making engine that runs within the Coho Cluster. It evaluates the system and makes decisions about two specific points of control: data placement and connectivity. At any point, the SDSC can respond to change either by moving client connections or by migrating data. These two knobs turn out to be remarkably powerful tools for keeping the system performing well.
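The text does not describe how the SDSC chooses between its two knobs, so the following is only a minimal sketch under an assumed model: each client connection adds network load to the node serving it, and each data object adds I/O load to the node storing it. The `Node` class, the `rebalance` function and the `limit` threshold are hypothetical names, not Coho's API.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    connections: dict = field(default_factory=dict)  # client -> network load
    objects: dict = field(default_factory=dict)      # data object -> I/O load

    def net_load(self):
        return sum(self.connections.values())

    def io_load(self):
        return sum(self.objects.values())


def rebalance(nodes, limit):
    """One pass of a hypothetical SDSC-style control loop.

    Knob 1 (connectivity): move a client connection off the node with the
    highest network load. Knob 2 (data placement): migrate a data object off
    the node with the highest I/O load. An item is moved only if the least
    loaded node can take it without itself exceeding `limit`.
    """
    actions = []
    knobs = (
        ("move_connection", Node.net_load, lambda n: n.connections),
        ("migrate_data", Node.io_load, lambda n: n.objects),
    )
    for action, load_of, items_of in knobs:
        hot = max(nodes, key=load_of)
        cold = min(nodes, key=load_of)
        if hot is cold or load_of(hot) <= limit:
            continue  # nothing to fix for this knob
        # Try the heaviest items first and move the first one that fits.
        candidates = sorted(items_of(hot).items(), key=lambda kv: kv[1], reverse=True)
        for item, load in candidates:
            if load_of(cold) + load <= limit:
                del items_of(hot)[item]
                items_of(cold)[item] = load
                actions.append((action, item, hot.name, cold.name))
                break
    return actions


if __name__ == "__main__":
    a = Node("a", {"c1": 50, "c2": 30}, {"o1": 20})
    b = Node("b", {"c3": 10}, {"o2": 60, "o3": 40})
    print(rebalance([a, b], limit=70))
    # prints [('move_connection', 'c1', 'a', 'b'), ('migrate_data', 'o3', 'b', 'a')]
```

In the example, node `a` is hot on the network side and node `b` on the I/O side, so one pass uses both knobs: a connection moves one way and a data object migrates the other.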

The main strength of this solution is its modularity. Not only are the storage devices completely new, but innovation has also gone into the switching fabric, which facilitates data migration. The solution is designed so that performance does not degrade as storage capacity scales.

Tiering in Coho Architecture:

Coho’s microarrays are directly responsible for automatically tiering the data stored on them. Tiering responds to workload characteristics, but put simply, as the PCIe flash device fills up, the coldest data is written out to the lower tier. This is illustrated in the diagram below.
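As a minimal sketch of "the coldest data is written out to the lower tier", assuming that least-recent access is a good enough proxy for coldness, a fast tier can be modeled as an LRU-ordered map that spills its oldest entries into a lower tier once it exceeds capacity. `TwoTierStore` and its methods are hypothetical names, and promotion back to flash is left out here.

```python
from collections import OrderedDict


class TwoTierStore:
    """Hypothetical two-tier store: a small fast tier (standing in for PCIe
    flash) in front of a larger slow tier. Least-recent access stands in for
    "coldest"; the real policy also reacts to workload characteristics."""

    def __init__(self, flash_capacity):
        self.flash_capacity = flash_capacity  # bytes the fast tier may hold
        self.flash = OrderedDict()            # key -> bytes, coldest first
        self.lower = {}                       # lower tier: key -> bytes

    def flash_used(self):
        return sum(len(v) for v in self.flash.values())

    def write(self, key, data):
        # New writes always land in the fast tier.
        self.lower.pop(key, None)
        self.flash[key] = data
        self.flash.move_to_end(key)
        self._demote_while_full()

    def read(self, key):
        if key in self.flash:
            self.flash.move_to_end(key)  # a read makes the entry "hot" again
            return self.flash[key]
        return self.lower[key]           # no promotion in this sketch

    def _demote_while_full(self):
        # As the fast tier fills, write the coldest entries out to the lower
        # tier. Always keep at least one entry so a single oversized write
        # is not immediately evicted.
        while self.flash_used() > self.flash_capacity and len(self.flash) > 1:
            key, data = self.flash.popitem(last=False)
            self.lower[key] = data


if __name__ == "__main__":
    store = TwoTierStore(flash_capacity=8)
    store.write("a", b"aaaa")
    store.write("b", b"bbbb")
    store.read("a")                  # "b" is now the coldest entry
    store.write("c", b"cccc")        # exceeds 8 bytes, so "b" is demoted
    print(sorted(store.flash), sorted(store.lower))  # prints ['a', 'c'] ['b']
```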

All new data writes go to NVMe flash. Effectively, this top tier of flash has the ability to act as an enormous write buffer, with the potential to absorb burst writes that are literally terabytes in size. Data in this top tier is stored sparsely at a variable block size.
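The phrase "stored sparsely at a variable block size" can be illustrated with a minimal sketch, assuming a simple model in which each write is kept as one extent of exactly the size written, keyed by its logical offset. `SparseExtentMap` is a hypothetical name, and the rule that writes never overlap is a simplification, not a description of how Coast actually lays out flash.

```python
import bisect


class SparseExtentMap:
    """Hypothetical sketch of sparse, variable-block-size storage: each write
    is kept as a single extent of exactly the size the client wrote, keyed by
    its logical offset, so only bytes actually written consume space."""

    def __init__(self):
        self.starts = []   # sorted extent start offsets
        self.extents = {}  # start offset -> bytes

    def write(self, offset, data):
        # Simplification: writes are assumed not to overlap existing extents
        # (a rewrite at the same offset simply replaces that extent).
        if offset not in self.extents:
            bisect.insort(self.starts, offset)
        self.extents[offset] = data

    def read(self, offset, length):
        # Assemble [offset, offset + length); holes read back as zero bytes.
        end = offset + length
        out = bytearray(length)
        i = max(bisect.bisect_right(self.starts, offset) - 1, 0)
        while i < len(self.starts) and self.starts[i] < end:
            start = self.starts[i]
            data = self.extents[start]
            lo, hi = max(offset, start), min(end, start + len(data))
            if lo < hi:
                out[lo - offset:hi - offset] = data[lo - start:hi - start]
            i += 1
        return bytes(out)

    def space_used(self):
        return sum(len(d) for d in self.extents.values())


if __name__ == "__main__":
    m = SparseExtentMap()
    m.write(0, b"hdr")
    m.write(1 << 30, b"tail!")  # 1 GiB into the logical address space
    print(m.space_used())       # prints 8
    print(m.read(1, 4))         # prints b'dr\x00\x00'
```

Writing 3 bytes at offset 0 and 5 bytes at 1 GiB consumes 8 bytes rather than a gigabyte, which is the property that lets a fast tier hold large, scattered bursts of writes efficiently.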

As data in the top layer of flash ages and that layer fills, Coho’s operating environment (called Coast) actively migrates cold data to the lower tiers within the microarray. The policy for this demotion is device-specific: on our hybrid (HDD-backed) DataStore nodes, data is consolidated into linear 512K regions and written out as large chunks. On repeated access, or when analysis tells us that access is predictive of future re-access, disk-based data is “promoted,” or copied back into flash so that additional reads to the chunk are served faster.
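The demotion and promotion policy can be sketched as two small pieces, assuming cold blocks arrive as (key, bytes) pairs: one packs them into linear 512K regions so each can be written to disk as a large sequential chunk, the other flags a disk-resident chunk for promotion after repeated reads. `pack_into_regions`, `PromotionTracker` and the read threshold of 2 are hypothetical; the text does not give Coast's thresholds, and a plain counter replaces its predictive analysis.

```python
REGION_SIZE = 512 * 1024  # "linear 512K regions", taken from the text
PROMOTE_AFTER = 2         # hypothetical: disk reads before a chunk is promoted


def pack_into_regions(cold_blocks, region_size=REGION_SIZE):
    """Consolidate variable-sized cold blocks into linear regions so that each
    region can be written to disk as one large sequential chunk.

    cold_blocks: iterable of (key, bytes). Returns a list of
    (region_bytes, index) pairs, where index maps key -> (offset, length).
    A block larger than region_size gets a region of its own (simplification).
    """
    regions, buf, index = [], bytearray(), {}
    for key, data in cold_blocks:
        if buf and len(buf) + len(data) > region_size:
            regions.append((bytes(buf), index))  # region full: emit it
            buf, index = bytearray(), {}
        index[key] = (len(buf), len(data))
        buf += data
    if buf:
        regions.append((bytes(buf), index))
    return regions


class PromotionTracker:
    """Counts reads of disk-resident chunks and reports when a chunk should
    be copied back into flash."""

    def __init__(self, threshold=PROMOTE_AFTER):
        self.threshold = threshold
        self.reads = {}

    def on_disk_read(self, key):
        count = self.reads.get(key, 0) + 1
        if count >= self.threshold:
            self.reads.pop(key, None)  # promoted: later reads are served from flash
            return True
        self.reads[key] = count
        return False


if __name__ == "__main__":
    kib = 1024
    blocks = [("a", b"x" * 300 * kib), ("b", b"y" * 300 * kib), ("c", b"z" * 100 * kib)]
    regions = pack_into_regions(blocks)
    print([sorted(idx) for _, idx in regions])  # prints [['a'], ['b', 'c']]
    tracker = PromotionTracker()
    print(tracker.on_disk_read("b"), tracker.on_disk_read("b"))  # prints False True
```

Packing trades a little lookup bookkeeping (the per-region index) for large sequential writes, which suit HDDs far better than many small random ones.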

Source: http://www.cohodata.com/blog/2015/03/18/software-defined-storage/
